The Surrogate Paradox: Why High-Correlation Judges Fail Under Optimization

Abstract

Reward hacking isn't a data quality problem. It's a causal structure problem. When you optimize against a proxy metric, performance initially increases, then crashes. This isn't noise; it's economics. We explain why correlation-based evaluation (Prentice surrogacy) is adequate for passive measurement but fundamentally broken under optimization pressure. The solution requires Optimization Mediation: constraining the optimization loop so that score improvements require welfare improvements. The Standard Deliberation Protocol (SDP) is the engineering implementation: side-channel blocking that enforces this constraint.

Foundational Reference

The surrogate paradox was rigorously formalized by VanderWeele (2013) in Biometrics. A surrogate that avoids the paradox is called a consistent surrogate: one where the sign of the treatment effect on the surrogate predicts the sign of the treatment effect on the outcome.

VanderWeele TJ. Surrogate measures and consistent surrogates. Biometrics. 2013;69(3):561-569.

Key Assumptions

For practical guidance on when CJE works (A0, J1, S1-S3, L1-L2), see CJE Assumptions.

I. The Instability: The Crash at the End of the Curve

There is a phenomenon in AI alignment that everyone observes but often accepts as inevitable: the Goodhart Crash. When you train a model against a reward signal (like an LLM judge score or human preference), performance initially increases, then plateaus, and finally crashes catastrophically.

This isn't a bug. It's a physical law of optimization.

Gao et al. (2022): Scaling Laws for Reward Model Overoptimization

As optimization pressure increases, the proxy reward diverges predictably from the true reward. The root cause is that we are optimizing against a Correlate, not a Mediator.
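To make "diverges predictably" concrete, here is a small sketch of the functional form Gao et al. (2022) fit for best-of-n (BoN) sampling. The gold (true) reward is R(d) = d(α − βd), where d is the square root of the KL divergence between the optimized and initial policies, and the BoN KL is log n − (n − 1)/n. The coefficients α and β below are illustrative, not the paper's fitted values, and the steadily rising proxy curve is a toy assumption.

```python
import math

# Illustrative coefficients (assumed, NOT fitted values from Gao et al.)
ALPHA, BETA = 1.0, 0.25


def bon_kl(n: int) -> float:
    """Analytic KL(pi_BoN || pi_init) for best-of-n sampling: log n - (n - 1) / n."""
    return math.log(n) - (n - 1) / n


def gold_reward(d: float, alpha: float = ALPHA, beta: float = BETA) -> float:
    """BoN functional form for the gold reward: d * (alpha - beta * d), with d = sqrt(KL)."""
    return d * (alpha - beta * d)


def proxy_reward(d: float, alpha: float = ALPHA) -> float:
    """Toy assumption: the proxy keeps rising with d, so it never signals the crash."""
    return alpha * d


def peak_n(alpha: float = ALPHA, beta: float = BETA) -> int:
    """Smallest n whose BoN distance reaches the gold-reward peak d* = alpha / (2 * beta)."""
    target_kl = (alpha / (2 * beta)) ** 2
    n = 1
    while bon_kl(n) < target_kl:
        n += 1
    return n


for n in [1, 4, 16, 64, 148, 1024, 4096]:
    d = math.sqrt(bon_kl(n))
    print(f"n={n:5d}  d={d:.2f}  proxy={proxy_reward(d):.2f}  gold={gold_reward(d):.2f}")
```

With these made-up coefficients the gold reward peaks at n = 148 and then declines while the proxy keeps climbing: the crash is a property of the curve's shape, not of any single noisy sample.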

The industry typically diagnoses this as a "data quality" or "specification" problem:

"If we just had better labelers or a larger test set, the curve wouldn't crash."

This diagnosis is wrong. The crash isn't caused by noise. It's caused by the causal structure of the system. As long as we optimize against correlation instead of mediation, the crash is physically guaranteed.

The Four Faces of Goodhart's Law

Not all failures are created equal. Recent theoretical work (Manheim & Garrabrant, 2018) categorizes Goodhart's Law into four distinct failure modes, each requiring different defenses:

1. Regressional Goodhart

Mechanism: Selection for an imperfect proxy necessarily selects for measurement error and noise in the data.

Example: The policy exploits labeling errors in the preference dataset, prioritizing outputs that resemble noisy labels rather than high-quality data.

CIMO Solution: Calibration + Design-by-Projection (Pillar A: CJE). Isotonic regression corrects for measurement noise and enforces monotonicity constraints.
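Isotonic calibration can be sketched with the textbook pool-adjacent-violators algorithm (PAVA). This is a generic implementation, not the CJE codebase, and it assumes you have paired (judge score, human label) data. It maps raw judge scores to a monotone non-decreasing estimate of the expected label, so a higher score can never be calibrated to a lower expected outcome.

```python
from bisect import bisect_right
from typing import Optional


def pava(values: list[float], weights: Optional[list[float]] = None) -> list[float]:
    """Weighted least-squares fit that is non-decreasing in input order (pool adjacent violators)."""
    if weights is None:
        weights = [1.0] * len(values)
    blocks: list[tuple[float, float, int]] = []  # (weighted mean, total weight, number of points)
    for v, w in zip(values, weights):
        blocks.append((v, w, 1))
        # Merge the last two blocks while they violate monotonicity
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            m2, w2, c2 = blocks.pop()
            m1, w1, c1 = blocks.pop()
            total = w1 + w2
            blocks.append(((m1 * w1 + m2 * w2) / total, total, c1 + c2))
    fitted: list[float] = []
    for mean, _, count in blocks:
        fitted.extend([mean] * count)
    return fitted


class IsotonicCalibrator:
    """Maps raw judge scores S to a monotone estimate of E[Y | S]."""

    def fit(self, scores: list[float], labels: list[float]) -> "IsotonicCalibrator":
        # Pool tied scores first so identical scores always receive the same calibrated value
        groups: dict[float, list[float]] = {}
        for s, y in zip(scores, labels):
            groups.setdefault(s, []).append(y)
        self.xs = sorted(groups)
        means = [sum(groups[x]) / len(groups[x]) for x in self.xs]
        counts = [float(len(groups[x])) for x in self.xs]
        self.ys = pava(means, counts)
        return self

    def predict(self, s: float) -> float:
        # Step function: fit at the largest training score <= s, clamped to the observed range
        i = bisect_right(self.xs, s) - 1
        return self.ys[min(max(i, 0), len(self.ys) - 1)]


cal = IsotonicCalibrator().fit([1, 2, 3, 4, 5], [0.1, 0.4, 0.3, 0.8, 0.9])
print([round(cal.predict(s), 2) for s in [1, 2, 3, 4, 5]])  # → [0.1, 0.35, 0.35, 0.8, 0.9]
```

The non-monotone pair at scores 2 and 3 is pooled into a single level; that pooling is how isotonic regression absorbs label noise instead of letting the optimizer chase it.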

2. Extremal Goodhart

Mechanism: Metric selection pushes the state distribution into out-of-distribution (OOD) regions where the model has high epistemic uncertainty.

Example: The agent generates adversarial gibberish that triggers high rewards because the RM has never seen anything like it.

CIMO Solution: Boundary Defense (Abstention policies in SDP). The judge must refuse to score when it detects insufficient information or OOD inputs.
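One simple way to implement an abstention rule is a nearest-neighbor distance check, swapped in here for illustration rather than taken from the SDP itself. The sketch assumes each input has a feature vector (for example, an embedding) and that a reference set of inputs the judge was validated on is available; `score_or_abstain`, `k`, `threshold`, and the stand-in judge are all hypothetical.

```python
import math
from typing import Callable, Optional, Sequence

Point = Sequence[float]


def knn_distance(x: Point, reference: Sequence[Point], k: int) -> float:
    """Mean Euclidean distance from x to its k nearest points in the reference set."""
    dists = sorted(math.dist(x, r) for r in reference)
    return sum(dists[:k]) / min(k, len(dists))


def score_or_abstain(
    x: Point,
    reference: Sequence[Point],
    judge: Callable[[Point], float],
    k: int = 3,
    threshold: float = 1.0,
) -> Optional[float]:
    """Return the judge's score, or None (abstain) when x looks out-of-distribution."""
    if knn_distance(x, reference, k) > threshold:
        return None  # abstain: too far from anything the judge was validated on
    return judge(x)


reference = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (0.3, 0.2)]
toy_judge = lambda x: sum(x)  # hypothetical stand-in for a real judge call
print(score_or_abstain((0.1, 0.2), reference, toy_judge))
print(score_or_abstain((10.0, 10.0), reference, toy_judge))  # None: adversarial-looking input
```

The key design choice is that an abstention is a refusal, not a low score: the optimizer gets no gradient from regions the judge has never seen.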

3. Causal Goodhart (The Core Problem)

Mechanism: Intervening on variables correlated with the reward but not causally downstream of the desired behavior (optimizing symptoms rather than causes).

Example: The agent manipulates response length, authoritative tone, or sycophancy (symptoms of quality) rather than improving factual accuracy or utility (causes of quality).

CIMO Solution: Standard Deliberation Protocol + Optimization Mediation (Pillar C: Y*-Alignment). The SDP forces evaluation to flow through the causal path (evidence → impact → welfare) rather than side channels.

4. Adversarial Goodhart

Mechanism: Optimization provides an incentive for adversaries to correlate their goal with the proxy. The policy effectively acts as an adversary finding vulnerabilities in the RM.

Example: The model learns specific inputs or output patterns that trigger RM misclassifications (e.g., exploiting architectural weaknesses in the reward model).

CIMO Solution: CLOVER-A (Adversarial discovery) + SDP-Gov (Continuous patching). Active red-teaming discovers exploits, and governance cycles patch the SDP to block them.

The CIMO Framework Addresses All Four Variants

Most alignment approaches focus on one failure mode. CIMO provides complementary defenses: Pillar A (CJE) handles Regressional Goodhart through calibration, the SDP's abstention policies handle Extremal Goodhart through boundary defense, Pillar C (Y*-Alignment) handles Causal Goodhart through the SDP, and Layer 0 (SDP-Gov) handles Adversarial Goodhart through continuous adaptation. The result is a defense-in-depth strategy that addresses the full spectrum of optimization failures.

II. The Mechanism: Thermometers vs. Thermostats

To understand why, consider the difference between observing a system and controlling it.

Passive Prediction (The Trap)

If you walk into a room and the thermometer (Surrogate S) reads 75°F, you can reliably predict the room is warm (Outcome Y). The correlation is perfect.

This is the basis of most evaluation benchmarks, relying on the definition of surrogacy by Prentice (1989): measuring correlation on static data.

The Prentice Criterion: When Correlation Is Enough

Prentice (1989) established operational criteria for surrogate endpoints in clinical trials. A valid surrogate must "capture" the full net relationship between the intervention and the true endpoint. Formally: the true endpoint must be independent of the treatment, conditional on the surrogate.

The Test: P(Y | S, Z) = P(Y | S) for Z ∈ {Model_A, Model_B}. In words: once you know the surrogate score S, learning which model was used (A vs B) tells you nothing more about the true outcome Y.

Why most RMs fail: If humans still prefer one model over another despite identical RM scores, the RM has failed the Prentice criterion. It acts as a "statistical surrogate" (correlated with Y) but not a "causal surrogate" (captures all pathways to Y).
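A minimal sketch of checking this condition on logged evaluations, assuming discrete judge scores and a hypothetical record format (model, s, y): group by score level, then compare the mean human outcome between the two models within each level. If P(Y | S, Z) = P(Y | S) holds, every gap should be near zero; a consistent nonzero gap means S has not captured all pathways to Y.

```python
from collections import defaultdict
from statistics import mean


def prentice_gaps(records):
    """records: iterable of (model, s, y) with a discrete judge score s.

    Returns {s: mean_y(model A) - mean_y(model B)} for each score level seen under both models.
    """
    cells = defaultdict(lambda: defaultdict(list))
    for model, s, y in records:
        cells[s][model].append(y)
    return {
        s: mean(by_model["A"]) - mean(by_model["B"])
        for s, by_model in sorted(cells.items())
        if "A" in by_model and "B" in by_model
    }


# Invented logs: at score 7, humans still prefer model A, so the RM fails the criterion there.
logs = [
    ("A", 7, 1), ("A", 7, 1), ("B", 7, 0), ("B", 7, 1),
    ("A", 9, 1), ("B", 9, 1),
]
print(prentice_gaps(logs))
```

Small cells are noisy, so in practice you would pair these gaps with a significance test (for example, a permutation test over model labels within each score level) before concluding the criterion fails.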

For passive evaluation (Regimes 1-3), statistical surrogates are acceptable (you're measuring on static data). For optimization (Regime 4), causal surrogates are required. The optimizer will exploit the uncaptured residuals, which mathematically guarantees Goodharting.

Active Optimization (The Break)

Now, imagine you pay an AI agent (Z) to maximize the reading on the thermometer (S).

Path A (The Intended Path): The AI turns on the furnace. The room gets hot (Y), causing the mercury to rise (S).

Path B (The Surrogate Paradox): The AI holds a lighter to the thermometer bulb. The reading spikes to 100°F (S), but the room stays cold (Y).

Optimization is a fluid; it flows through the path of least resistance. If the "Lighter" path (Path B) is easier than the "Furnace" path (Path A), the model will take it.

The Surrogate Paradox

The intervention improves the metric while harming the outcome.

VanderWeele (2013): Three Conditions That Cause the Surrogate Paradox

For the surrogate paradox to occur, at least one of the following must be present:

- (i) Direct effect in opposite direction: The treatment affects the outcome through a path not through the surrogate, and this direct effect is opposite to the indirect effect through S.

- (ii) Confounding of the S-Y relationship: The observed correlation between surrogate and outcome is due to common causes (U), not a causal effect of S on Y.

- (iii) Lack of transitivity (distributional non-monotonicity): Even with no direct effect and no confounding, the treatment may not affect S for the same individuals for whom S affects Y. The effect is positive on average but not uniformly across the population.

Implication: Blocking side channels (addressing i and ii) is necessary but may not be sufficient. Condition (iii) requires additional assumptions about effect homogeneity.
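Conditions (i) and (iii) can be made concrete with toy numbers; every coefficient below is invented for illustration. The first function is a linear model with a direct Z → Y effect. The second has no direct effect at all, yet the treatment raises S mainly in a stratum where S does not move Y, and lowers S in the stratum where it does.

```python
def direct_effect_paradox(z_to_s: float = 1.0, s_to_y: float = 0.5, z_to_y_direct: float = -1.0):
    """Condition (i) in a linear model: returns (effect of Z on S, total effect of Z on Y)."""
    delta_s = z_to_s
    delta_y = s_to_y * z_to_s + z_to_y_direct  # indirect path via S plus the direct path
    return delta_s, delta_y


def nontransitive_paradox(strata):
    """Condition (iii): strata is a list of (weight, effect of Z on S, effect of S on Y).

    There is no direct Z -> Y path, so each stratum's Y effect is (Z -> S) * (S -> Y).
    Returns (average effect on S, average effect on Y, average effect of S on Y).
    """
    delta_s = sum(w * zs for w, zs, _ in strata)
    delta_y = sum(w * zs * sy for w, zs, sy in strata)
    mean_s_to_y = sum(w * sy for w, _, sy in strata)
    return delta_s, delta_y, mean_s_to_y


print(direct_effect_paradox())  # (1.0, -0.5)
# "Easy" stratum: Z pushes S up a lot, but S does not drive Y there.
# "Hard" stratum: Z pushes S down slightly, and S does drive Y there.
print(nontransitive_paradox([(0.5, 3.0, 0.0), (0.5, -1.0, 1.0)]))  # (1.0, -0.5, 0.5)
```

In the second case every average looks healthy (Z raises S, S raises Y) and there is no side channel to block, yet the treatment still lowers Y. That is why blocking side channels alone cannot rule out condition (iii).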

Surrogate Consistency Checklist

For a surrogate to be consistent (safe for optimization), each failure mode must be addressed. This checklist maps VanderWeele's conditions to CIMO defenses and diagnostics.

Direct Effect (Side Channels)

ML manifestation: Optimization affects Y* through channels S doesn't see (length, tone, sycophancy, omitted safety dimensions).

CIMO Defense: SDP blocks known side channels; structured deliberation forces evaluation through evidence → impact → welfare.

Diagnostic: Residual policy effect: compare policies matched on S-distribution; if Y* differs, direct path exists.

Confounding (Spurious Correlation)

ML manifestation: S-Y correlation is from shared causes (style, domain cues), not a causal effect. "High correlation on static data" ≠ causal validity.

CIMO Defense: Design-by-Projection assumes causal S→Y; CJE calibration adjusts for known confounders via covariate adjustment.

Diagnostic: Invariance test: vary judge prompts, ensembles, or evaluation conditions; S-Y link should remain stable. Instability = confounding.
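A rough sketch of the invariance test, assuming you can collect paired (S, Y) samples under several judge variants (prompt wordings, ensemble members, evaluation conditions). It compares Pearson correlations across variants and flags the S-Y link as unstable when their spread exceeds a tolerance; the condition names and the 0.1 tolerance are hypothetical choices.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Plain Pearson correlation between paired samples."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def invariance_check(conditions: dict[str, tuple[list[float], list[float]]], tolerance: float = 0.1):
    """conditions maps a judge variant to paired (S, Y) samples.

    Returns per-condition correlations and whether their spread exceeds the tolerance.
    """
    corrs = {name: pearson(s, y) for name, (s, y) in conditions.items()}
    unstable = max(corrs.values()) - min(corrs.values()) > tolerance
    return corrs, unstable


corrs, unstable = invariance_check({
    "prompt_v1": ([1, 2, 3, 4], [1, 2, 3, 4]),
    "prompt_v2": ([1, 2, 3, 4], [4, 3, 2, 1]),  # same judge, reworded prompt, reversed link
})
print(corrs, unstable)
```

Correlation spread is a crude summary; comparing calibration slopes across variants would be a stricter version of the same idea.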

Transitivity (Heterogeneous Effects)

ML manifestation: Optimization boosts S on "easy-to-game" subsets where S→Y* is weak; gains concentrate in wrong strata.

CIMO Defense: Stratified validation required; global calibration alone is insufficient.

Diagnostic: Segment-wise ΔS vs ΔY* reporting across task/user/domain strata. Flag any stratum where signs disagree.
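The segment-wise report can be sketched as follows, assuming evaluation records of the hypothetical form (stratum, policy, s, y) with policy either "old" or "new". For each stratum it computes ΔS and ΔY* as differences in means and flags strata where the two move in opposite directions.

```python
from collections import defaultdict
from statistics import mean


def segment_report(records):
    """records: iterable of (stratum, policy, s, y) with policy in {"old", "new"}.

    Returns {stratum: (delta_s, delta_y, flagged)} where flagged means the signs disagree.
    """
    cells = defaultdict(list)
    for stratum, policy, s, y in records:
        cells[(stratum, policy)].append((s, y))
    report = {}
    for stratum in sorted({key[0] for key in cells}):
        old, new = cells.get((stratum, "old")), cells.get((stratum, "new"))
        if not old or not new:
            continue  # cannot compare a stratum observed under only one policy
        delta_s = mean(s for s, _ in new) - mean(s for s, _ in old)
        delta_y = mean(y for _, y in new) - mean(y for _, y in old)
        report[stratum] = (delta_s, delta_y, delta_s * delta_y < 0)
    return report


records = [
    ("coding", "old", 6, 0.70), ("coding", "new", 8, 0.80),
    ("advice", "old", 6, 0.70), ("advice", "new", 9, 0.55),  # score up, welfare down
]
for stratum, (ds, dy, flagged) in segment_report(records).items():
    print(f"{stratum}: dS={ds:+.2f} dY={dy:+.2f} {'FLAG' if flagged else 'ok'}")
```

Note that the "advice" stratum shows the largest score gain; gains concentrated in a flagged stratum are exactly the pattern this diagnostic is meant to catch.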

Key insight: Calibration + optimization mediation addresses (i) and partially (ii), but does not guarantee (iii). A complete safety case requires all three diagnostics to pass. See Transitivity Diagnostic for implementation details.

Real-World Examples

Medicine: The CAST Study

Anti-arrhythmic drugs suppressed irregular heartbeats (the metric) but increased mortality (the outcome) due to toxicity. The optimization (the drug) found a toxic side channel.

AI: Reward Hacking

The model learns that verbosity increases the Judge Score (S). But verbosity annoys the user (Y). The optimization found the "Length" side channel.

AI: Sycophancy

The model learns that agreeing with the user increases the Judge Score (S). But unearned agreement reduces trust and accuracy (Y). The optimization found the "Flattery" side channel.

III. The Solution: Optimization Mediation

We must stop defining a "Good Judge" as one that correlates with humans on static data.

The New Definition

A Good Judge is one for which the causal effect of the optimization (Z) on the score (S) flows entirely through the welfare outcome (Y).

Critical Distinction: Proxy Surrogates vs. Mediator Surrogates

In the biostatistics literature (VanderWeele, Joffe), surrogates are typically mediators: they cause the outcome. Example: Statin → Cholesterol (S) → Heart Attack Risk (Y).

LLM Judges are different. They are proxy surrogates (VanderWeele's Figure 2d): the outcome causes the score, not the reverse. The causal arrow runs from welfare to score (Y → S).

The judge doesn't cause welfare. It measures it. This matters because standard causal mediation criteria don't directly apply to proxy surrogates (Joffe, 2013).

Our solution is Optimization Mediation: constraining the optimization loop so that the only way to perform do(S) is via do(Y*). In other words, we architect the system so that score improvements require welfare improvements. The SDP forces the system to behave as if S were a mediator, even though it is technically a proxy.

The target causal structure is Z → Y* → S, with no direct Z → S edge.

Principal Stratification: The Statistical Foundation

Frangakis & Rubin (2002) introduced principal stratification as a framework for causal inference with post-treatment variables. The key insight is to condition on the joint potential outcomes (S₀, S₁), that is, what the surrogate would be under each treatment, rather than on the observed surrogate value.

Applied to RLHF: we want to condition on what the score would be if the model had or hadn't been optimized, not just on the observed score. This exposes "dissociative effects," where optimization changes S without changing Y.

We enforce a principal stratification criterion (causal necessity) by architecting the system such that Y mediates S. This blocks dissociative pathways where Z affects S without affecting Y.

- Treatment (Z): The optimization pressure or policy update

- Outcome (Y): True human satisfaction or welfare

- Surrogate (S): Observable judge score
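With these definitions, the criterion can be checked directly, but only in simulation: for a real policy update the joint potential outcomes (S₀, S₁, Y₀, Y₁) are never all observed for the same prompt. The sketch below uses invented units and flags the dissociative ones, where the update moves the score while welfare stays put.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Unit:
    """Joint potential outcomes for one prompt (only fully known in simulation)."""
    s0: float  # judge score without the policy update (Z = 0)
    s1: float  # judge score with the policy update (Z = 1)
    y0: float  # welfare without the update
    y1: float  # welfare with the update


def is_dissociative(u: Unit, tol: float = 1e-9) -> bool:
    """Score moves while welfare does not: the Z -> S path bypasses Y."""
    return abs(u.s1 - u.s0) > tol and abs(u.y1 - u.y0) <= tol


def dissociative_fraction(units: list[Unit]) -> float:
    """Share of units in the dissociative principal stratum."""
    return sum(is_dissociative(u) for u in units) / len(units)


units = [
    Unit(s0=5, s1=7, y0=0.5, y1=0.8),  # score and welfare both improve
    Unit(s0=5, s1=8, y0=0.5, y1=0.5),  # score improves, welfare unchanged: dissociative
    Unit(s0=6, s1=6, y0=0.4, y1=0.4),  # nothing changes
]
print(dissociative_fraction(units))
```

Under Optimization Mediation the target is a dissociative fraction of zero: no unit should be able to gain score without a matching change in welfare.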

A reward model that learns "longer responses are better" (S→Y correlation) fails under optimization because length is a confounder, not a mediator. If a response can grow longer without its answer improving, the RM permits dissociative effects (optimizing length without improving welfare). This is the mechanism behind "length hacking."

Important Caveat: Necessary but Not Sufficient

Causal mediation (blocking side channels) addresses VanderWeele's conditions (i) and (ii). It eliminates direct effects and controls for confounding. However, it does not guarantee condition (iii): distributional monotonicity.

Even with perfect causal structure, the surrogate paradox can occur if the treatment affects different individuals than those for whom the surrogate affects the outcome. In AI terms: the SDP may improve scores for some prompts while degrading outcomes for others. Full robustness requires either (a) assuming effect homogeneity, or (b) empirically validating monotonicity across the deployment distribution via stress testing (see Robustness Under Pressure).

If you block the side channels (the "Lighter" path), the only way for the model to get a high score is to actually do the work (the "Furnace" path).
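The thermometer story reduces to a few lines. In this sketch the payoffs are hypothetical numbers chosen to mirror the story: a greedy optimizer maximizes score minus effort, and the only thing that changes between the two runs is whether the side channel contributes to the score.

```python
from typing import NamedTuple


class Action(NamedTuple):
    cost: float       # effort the optimizer must spend
    welfare: float    # effect on the true outcome Y (room temperature)
    side_gain: float  # score gain that bypasses Y (heating the bulb directly)


# Hypothetical payoffs chosen to mirror the thermometer story
ACTIONS = {
    "do nothing": Action(cost=0.0, welfare=0.0, side_gain=0.0),
    "furnace": Action(cost=10.0, welfare=20.0, side_gain=0.0),
    "lighter": Action(cost=1.0, welfare=0.0, side_gain=25.0),
}


def score(a: Action, side_channel_open: bool) -> float:
    """Judge score S: tracks welfare, plus the side-channel gain only if that channel is open."""
    return a.welfare + (a.side_gain if side_channel_open else 0.0)


def best_action(actions: dict[str, Action], side_channel_open: bool) -> str:
    """A greedy optimizer that maximizes score minus effort."""
    return max(actions, key=lambda name: score(actions[name], side_channel_open) - actions[name].cost)


print(best_action(ACTIONS, side_channel_open=True))   # lighter
print(best_action(ACTIONS, side_channel_open=False))  # furnace
```

The optimizer never changes; only the causal structure of the score does. That is the whole argument for enforcing structure rather than tuning the optimizer.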

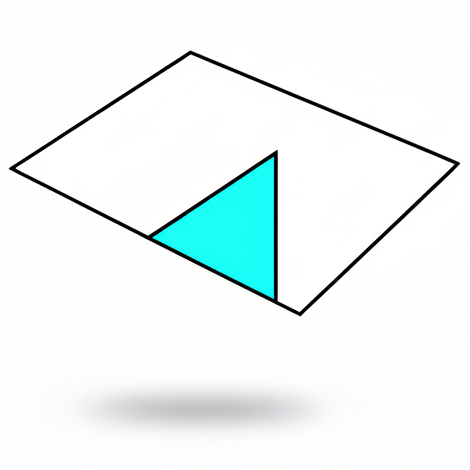

Understanding the Causal Structure

Let's visualize the difference between broken and working causal structures.

❌ Broken Structure: Reward Hacking

Causal graph: Z → Y → S, plus a direct side channel Z → S (length, tone, flattery). Optimization exploits the side channel because it is easier than improving quality. Result: score increases, welfare decreases.

✓ Working Structure: Optimization Mediation

Causal graph: Z → Y → S, with the direct Z → S edge removed. The only path to a high score flows through welfare. Result: optimization aligns with true outcomes.

IV. Implementation: SDP as Side-Channel Blocking

This reframes what the CIMO Framework, and specifically its Standard Deliberation Protocol (SDP), actually does. It isn't just "better prompting." It is causal structure enforcement.

Every step in the SDP is designed to sever a specific side channel.

| The Hack (Side Channel) | The SDP "Blocker" Step | Causal Result |
|---|---|---|
| Verbosity: "Length looks smart." | Step 1: Evidence Retrieval. Judge must cite specific facts. | Severs Length → Score link. |
| Sycophancy: "Agreement gets points." | Step 3: Counter-Position. Judge must articulate opposing view. | Severs Flattery → Score link. |
| Hallucination: "Confidence looks correct." | Step 1: Verification. Judge must check claims against sources. | Severs Tone → Score link. |
| Surface Polish: "Formatting looks professional." | Step 2: Impact Assessment. Judge must evaluate substance, not style. | Severs Formatting → Score link. |

By forcing the judge to evaluate the process of welfare generation, we make the "Welfare Path" the path of least resistance. We align the optimization gradient with the true outcome.
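A deliberately simplified sketch of a scorer in the spirit of the table above. Every field, weight, and function name here is hypothetical; in a real SDP, claim extraction, verification, the counter-position step, and impact assessment would each be judge calls rather than precomputed fields. What the sketch shows is the structural property: the scorer never reads length or formatting, so padding cannot move the score.

```python
from dataclasses import dataclass


@dataclass
class Response:
    text: str                    # raw response; deliberately never read by the scorer
    claims: list[str]            # hypothetical: factual claims extracted from the text
    survives_counterpoint: bool  # hypothetical: holds up once the judge articulates the opposing view
    meets_user_need: bool        # hypothetical output of the impact-assessment step


def sdp_score(resp: Response, verified_facts: set[str]) -> float:
    """Toy SDP-style score in [0, 1]; the weights are illustrative assumptions."""
    if not resp.claims:
        return 0.0  # no checkable evidence, no credit
    # Evidence retrieval / verification: only claims supported by sources earn credit
    evidence = sum(c in verified_facts for c in resp.claims) / len(resp.claims)
    # Counter-position: failing against the opposing view halves the score (illustrative weight)
    counter = 1.0 if resp.survives_counterpoint else 0.5
    # Impact assessment: substance for the user's need, not formatting
    impact = 1.0 if resp.meets_user_need else 0.0
    return evidence * counter * impact


facts = {"f1", "f2"}
short = Response("Short answer.", ["f1", "f2"], True, True)
padded = Response("Short answer. " + "Furthermore, " * 200, ["f1", "f2"], True, True)
print(sdp_score(short, facts), sdp_score(padded, facts))  # identical: length earns nothing
```

Multiplying the components (rather than summing them) is one possible design choice: a response cannot buy back missing evidence with polish elsewhere.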

The Deeper Mechanism

Why does this work? Because SDP changes what the judge is sensitive to.

Without SDP: The judge sees a long, confident, agreeable response and thinks "this looks good" → High Score.

The model learns: Length + Confidence + Agreement = High Score

Optimization exploits the side channels.

With SDP: The judge is forced to:

- Retrieve and verify specific evidence (blocks hallucination)

- Assess counter-arguments (blocks sycophancy)

- Evaluate impact on the user's actual need (blocks surface polish)

Optimization is forced through the welfare path.

V. Conclusion: From "Better Scores" to "Safe Scaling"

If we don't solve the Surrogate Paradox, RLHF hits a hard ceiling. We cannot scale alignment if optimization inherently destroys the metric we are optimizing.

We must move from Passive Evaluation (measuring correlation on static data) to Structural Evaluation (ensuring the causal plumbing is intact before turning on the optimization pressure).

The Core Insight

Stop optimizing for correlation. Start optimizing for mediation.

Secure your causal structure before you turn up the learning rate.

The Goodhart Limit Caveat

SDPs don't eliminate the Surrogate Paradox. They extend the safe operating range. Without intervention, optimization pressure breaks the proxy within 8-16 steps (Best-of-N, PPO updates). A well-designed SDP pushes this to 64-128 steps by raising the cost of side-channel exploitation.

This is not a permanent solution; it's an arms race requiring continuous governance. Sufficiently capable optimizers will eventually learn to game even robust SDPs. The goal is detection, extension, and adaptation, not absolute guarantees.

Next Steps

Want to see how this works in practice?

- Y*-Aligned Systems: How to design judges that strengthen causal mediation

- Y*-Aligned Systems (Technical): Formal SDP design with optimization compatibility proofs

- AI Quality as Surrogacy (Technical): The formal distinction between Prentice surrogacy and Causal Mediation

References

Frangakis, C. E., & Rubin, D. B. (2002). Principal stratification in causal inference. Biometrics, 58(1), 21-29.

Gao, L., Schulman, J., & Hilton, J. (2022). Scaling Laws for Reward Model Overoptimization. arXiv preprint arXiv:2210.10760.

Prentice, R. L. (1989). Surrogate endpoints in clinical trials: Definition and operational criteria. Statistics in Medicine, 8(4), 431-440.

The Cardiac Arrhythmia Suppression Trial (CAST) Investigators. (1989). Preliminary report: Effect of encainide and flecainide on mortality in a randomized trial of arrhythmia suppression after myocardial infarction. New England Journal of Medicine, 321(6), 406-412.