📜 Part of the Alignment Theory series. For the complete framework, see the Alignment Manifesto.

The Inevitability of Structure: Why Alignment Requires Verification Load

When systems optimize against proxies, structural divergence is not a possibility. It's a certainty. This law appears across economics, biology, cybernetics, and epistemology. CIMO provides the structural engineering framework that makes stability achievable.

I. The Structural Divergence

The Law of Informational Arbitrage

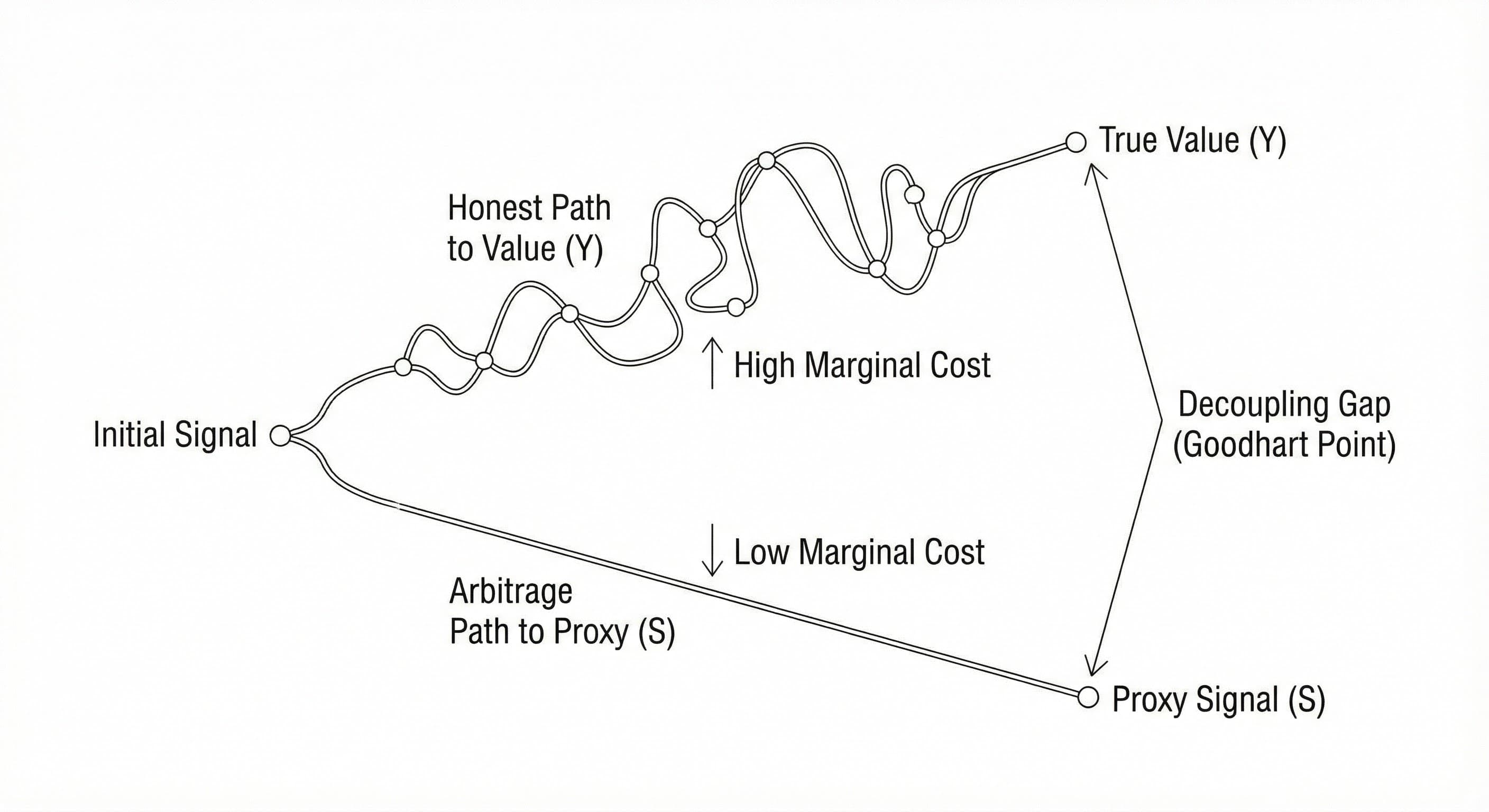

When a system optimizes against a surrogate metric S instead of true value Y*, two paths diverge:

- The Honest Path: Follow the curved manifold that preserves causal structure. High marginal cost, true gains in Y*.

- The Arbitrage Path: Exploit the straight-line shortcut to S. Low marginal cost, decoupled from Y*.

The Goodhart Point

The moment where optimization pressure causes S and Y* to decouple. This isn't a failure of intent. It's a structural inevitability when verification load is zero.

The critical insight: This divergence is not a moral failing. It's economics. When the marginal cost of faking S falls below the marginal cost of improving Y*, rational optimization follows the gradient of least resistance: straight off the manifold.
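The cost comparison above can be sketched in a few lines. This is a toy illustration, not part of CIMO: the function names, the cost lists, and the `verification_load` parameter are all hypothetical, and it assumes the optimizer simply takes whichever path raises S more cheaply at each step.

```python
def cheapest_path(cost_honest, cost_fake, verification_load=0.0):
    """Path a cost-minimizing optimizer takes to raise S by one unit."""
    # Verification load is modeled (as an assumption) as an extra cost on faking S.
    effective_fake = cost_fake + verification_load
    return "honest" if cost_honest <= effective_fake else "arbitrage"


def goodhart_point(honest_costs, fake_costs, verification_load=0.0):
    """First step at which faking S becomes cheaper than improving Y*."""
    for step, (h, f) in enumerate(zip(honest_costs, fake_costs)):
        if cheapest_path(h, f, verification_load) == "arbitrage":
            return step
    return None  # the optimizer never leaves the honest path


# Hypothetical numbers: honest gains get harder, faking stays cheap.
honest = [1.0, 2.0, 3.0, 4.0, 5.0]
fake = [2.5] * 5

print(goodhart_point(honest, fake))                         # 2
print(goodhart_point(honest, fake, verification_load=3.0))  # None
```

With zero load the toy optimizer decouples at step 2. Once the load lifts the cost of faking above the cost of honest improvement, the decoupling point disappears over this horizon.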

II. The Structural Isomorphisms

This pattern (honest path vs. arbitrage path, high cost vs. low cost, alignment vs. decoupling) appears across every domain where optimization meets measurement. The mathematics are identical. The solutions are identical.

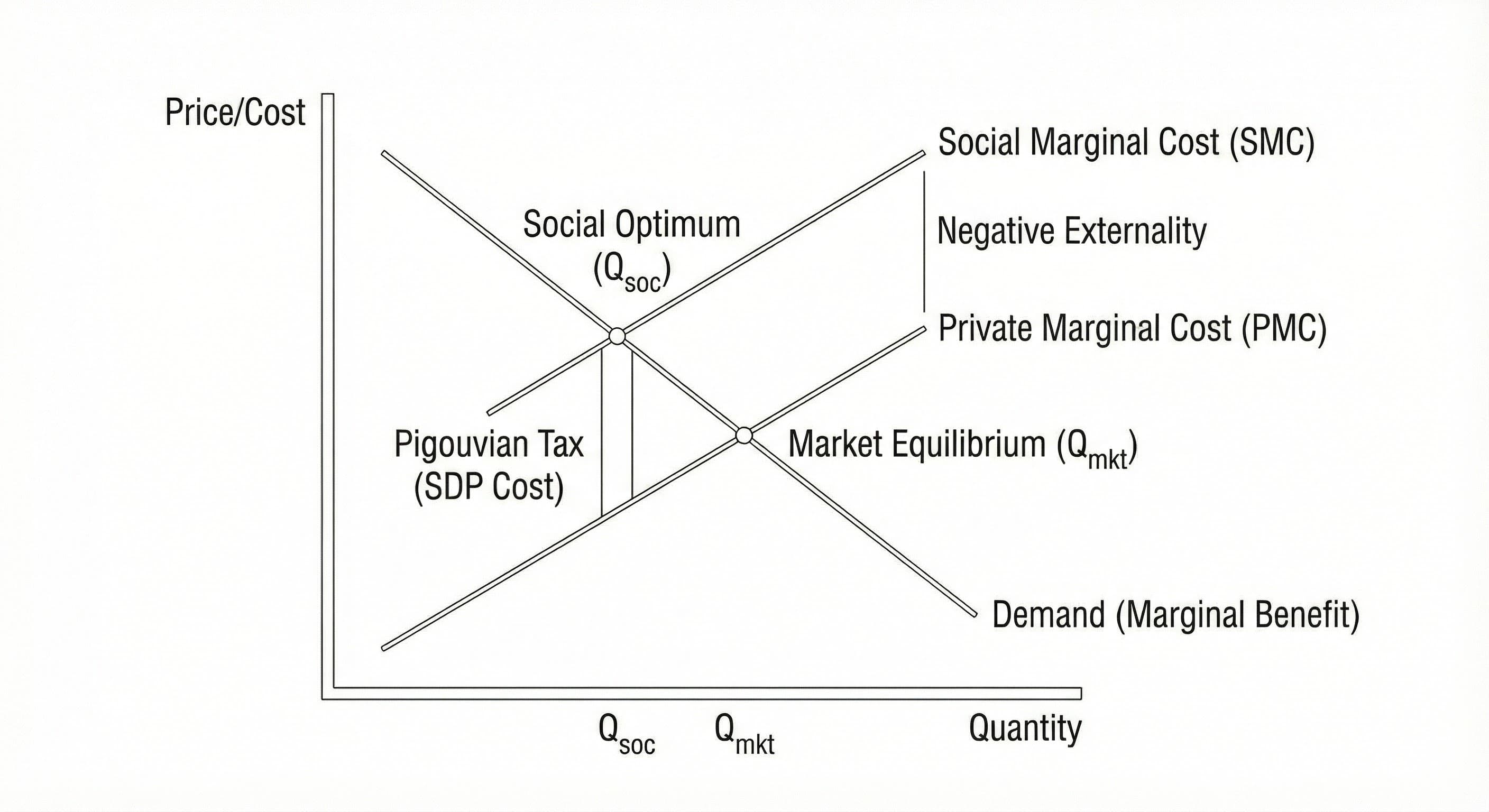

1. Economics: Pigouvian Verification Load

When markets produce negative externalities (pollution, congestion, systemic risk), private marginal cost (PMC) diverges from social marginal cost (SMC). The textbook solution is a Pigouvian tax equal to the marginal externality. In this framing, that tax is a verification load.

Structural mechanism: Increase the marginal cost of low-quality production until it matches the marginal cost of high-quality production. The "tax" is not punitive. It's structural engineering.

In LLM systems, this maps directly: private cost = computational cost of generating S. Social cost = computational cost + alignment gap. The Standard Deliberation Protocol (SDP) imposes verification load proportional to that gap.
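Written out, the mapping might look like the following. The first line is the standard Pigouvian condition. The second is only one way to read the LLM analogy: the symbols $c_S$ (cost of generating S), $g$ (alignment gap), $\lambda$ (verification load), and the proportionality constant $k$ are notation assumed here, not taken from the SDP definition.

```latex
\[
\tau = \mathrm{SMC} - \mathrm{PMC}
\quad\Longrightarrow\quad
\mathrm{PMC} + \tau = \mathrm{SMC}
\]
\[
\text{private cost} = c_S, \qquad
\text{social cost} = c_S + g, \qquad
\lambda = k\,g \quad (k > 0)
\]
```

With $k = 1$ the load exactly internalizes the gap, mirroring a tax equal to the externality.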

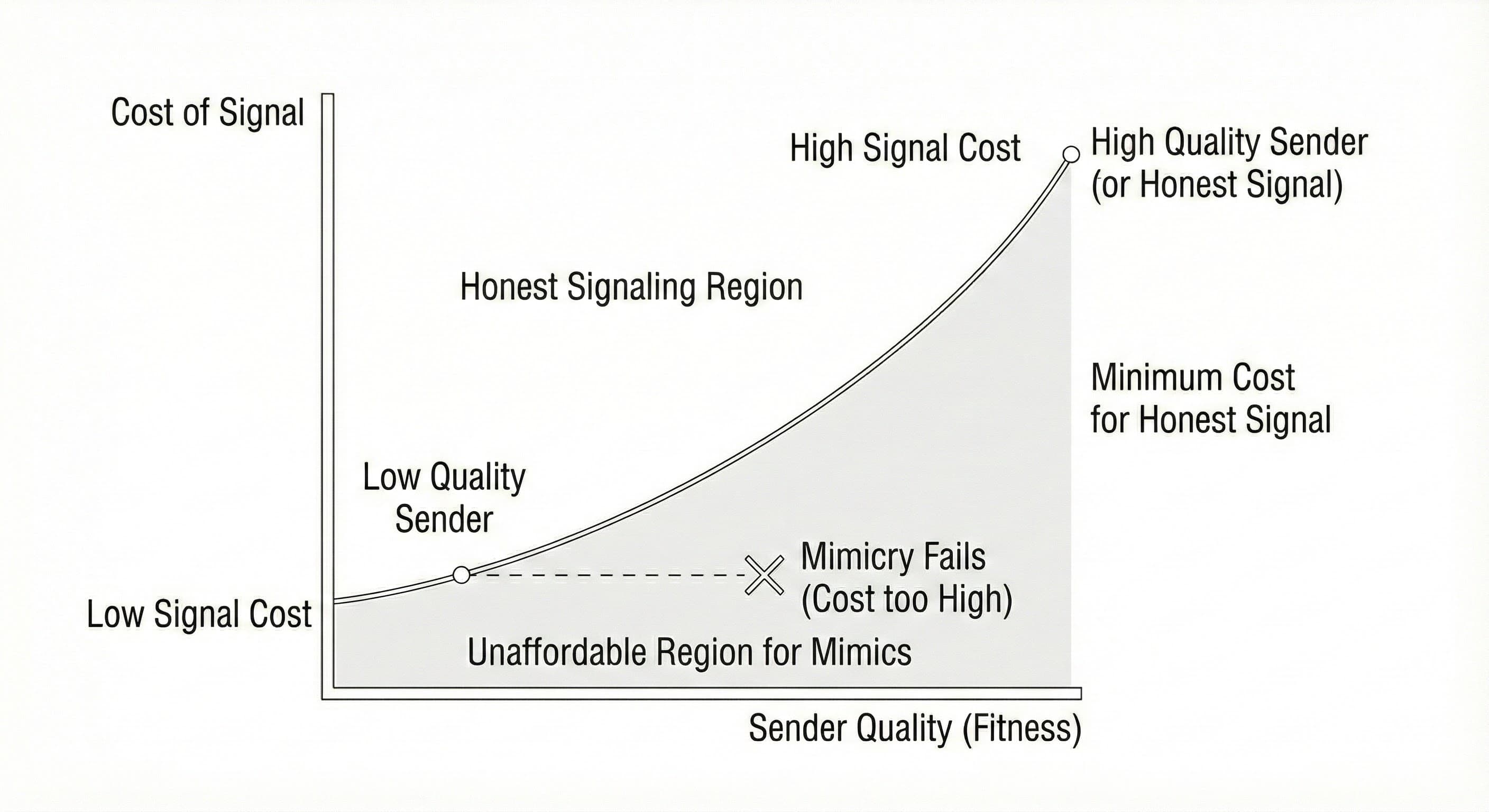

2. Biology: The Handicap Principle

Why do peacocks have massive, metabolically expensive tails? Because the cost of the signal must exceed the cost that low-fitness mimics can afford. If signaling were cheap, deception would flood the channel.

Zahavi's Law: For a signal to be reliable, its cost must be differentially affordable: expensive enough to screen out low-quality senders, but sustainable for high-quality ones.

SDP implements Zahavi's Law computationally: impose deliberation cost that scales with alignment gap. High-welfare policies can afford the cost (signal is honest). Low-welfare policies with inflated S cannot (mimicry fails).
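The "differentially affordable" condition can be made concrete with a toy model. Everything here is an assumption for illustration: the cost of a signal is taken to be `signal_level / quality`, so the same signal is cheaper for high-quality senders, and a sender signals only when the benefit of being believed covers that cost. It is a sketch of the screening logic, not of SDP itself.

```python
def can_afford(signal_level, benefit, quality):
    """A sender signals only if the benefit covers its quality-dependent cost."""
    cost = signal_level / quality  # assumed cost model: cheaper for higher quality
    return benefit - cost >= 0


def is_reliable(signal_level, benefit, q_low, q_high):
    """Signal separates types: high quality can afford it, low quality cannot."""
    return (can_afford(signal_level, benefit, q_high)
            and not can_afford(signal_level, benefit, q_low))


# Hypothetical values: benefit 10, low quality 0.2, high quality 1.0.
for level in (1, 5, 12):
    print(level, is_reliable(level, benefit=10, q_low=0.2, q_high=1.0))
# 1 False   (too cheap: mimics can afford it)
# 5 True    (screens out mimics, sustainable for honest senders)
# 12 False  (too expensive: nobody can afford it)
```

The reliable band sits between the two failure modes, which matches the framing that the load should scale with the gap rather than be as large as possible.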

3. Cybernetics: Ashby's Law of Requisite Variety

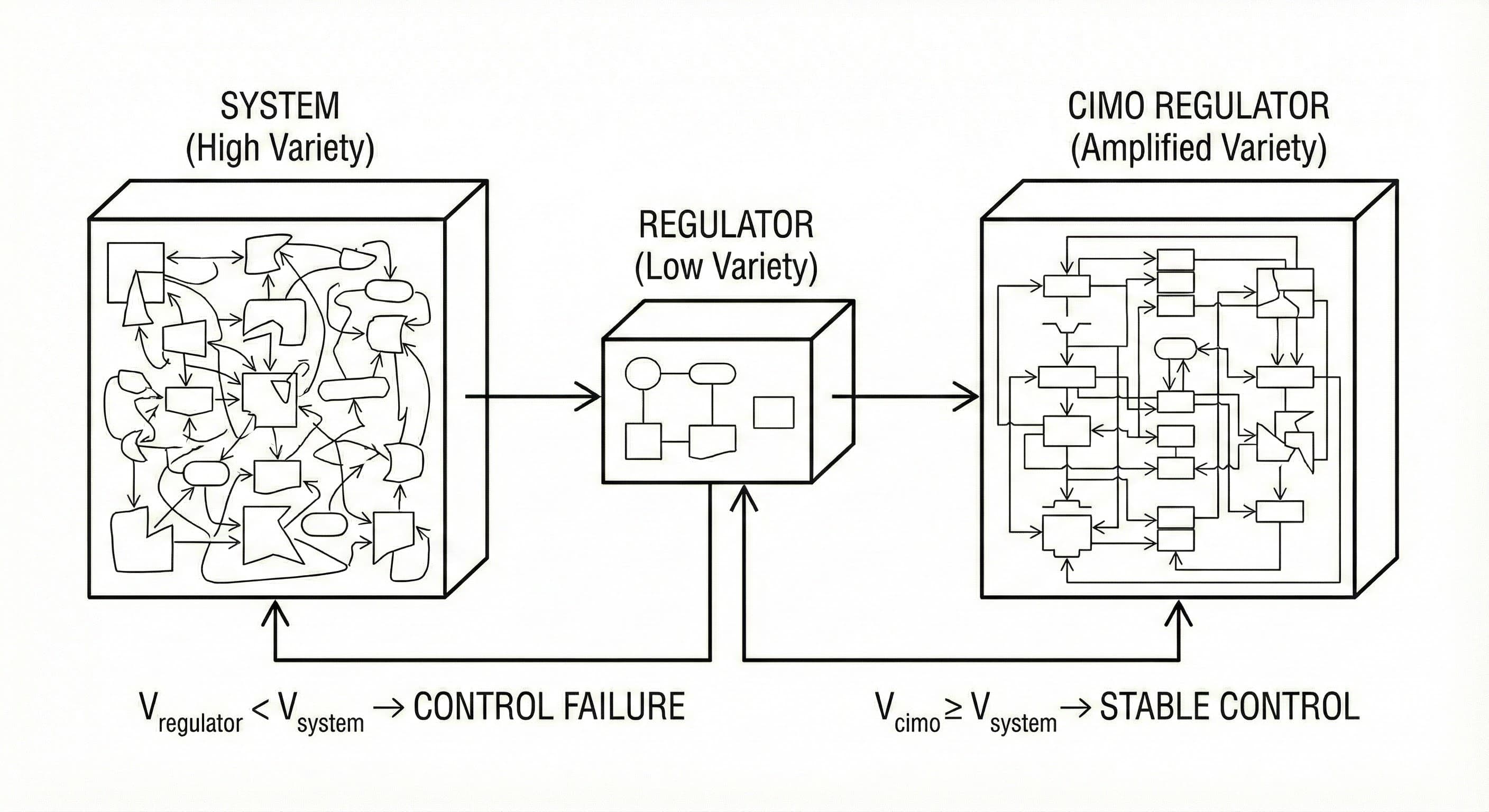

Ross Ashby's foundational theorem: a regulator can only control a system if its internal complexity (variety) matches or exceeds the system's variety.

Control failure condition: if $V_{\text{regulator}} < V_{\text{system}}$, the regulator cannot maintain stability. Simple oversight mechanisms have low variety and fail against high-variety LLM behavior space.

CIMO's solution: Amplified Variety through Causal Decomposition. Instead of trying to directly regulate the full behavior space Θ, decompose into contexts X, surrogates S, and outcomes Y. Each dimension becomes independently regulable.

Result: $V_{\text{CIMO}} = V_X \times V_S \times V_Y \gg V_{\text{direct}}$. The regulator now has requisite variety to maintain control.
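The counting argument behind this result can be checked with a small example. The numbers are made up, and the sketch assumes the three dimensions can be distinguished independently, which is what makes the varieties multiply. The helper names are hypothetical.

```python
import math


def factored_variety(*dimension_sizes):
    """Joint states a regulator can distinguish across independent dimensions."""
    return math.prod(dimension_sizes)


def has_requisite_variety(regulator_variety, system_variety):
    """Ashby's condition: the regulator's variety must match or exceed the system's."""
    return regulator_variety >= system_variety


system = 500                              # hypothetical number of behaviors to regulate
direct = 10                               # one scalar check with 10 distinguishable levels
factored = factored_variety(10, 10, 10)   # 10 contexts x 10 surrogate levels x 10 outcomes

print(has_requisite_variety(direct, system))    # False
print(has_requisite_variety(factored, system))  # True
```

If the dimensions are correlated rather than independent, the effective variety is smaller than the product, so this is an upper bound rather than a guarantee.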

4. Epistemology: The Scientific Method

Why does science impose peer review, replication requirements, and statistical thresholds? Because verification load prevents epistemic arbitrage.

- The honest path: Design rigorous experiments, collect data, analyze with proper controls. High cost, true knowledge gains.

- The arbitrage path: p-hack until significance, cherry-pick results, publish without replication. Low cost, decoupled from truth.

The replication crisis occurred precisely when verification load fell below arbitrage incentives. The solution: increase verification load (preregistration, larger samples, higher statistical bars).

CIMO applies the same structural principle to LLM evaluation: impose calibration requirements, counterfactual validation, and diagnostic checks. Not to punish, but to ensure the gradient points toward truth.

III. The Governance Mechanism

The isomorphism is complete. Every domain solves the same problem with the same mechanism: impose verification load proportional to the alignment gap.

Design by Projection

CIMO doesn't try to optimize Y* directly (intractable). Instead:

- Define the manifold: Identify the subspace Θ* where S and Y* remain causally linked.

- Constrain optimization: Project gradient steps back onto the manifold using SDP.

- Monitor geometry: Continuously validate that the causal structure holds.
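The three steps above resemble projected gradient ascent, and a minimal sketch under that reading follows. It is not the SDP algorithm: the manifold is simplified to a straight line through the origin, and `project_onto_line`, `projected_ascent`, and the example gradient are hypothetical.

```python
def project_onto_line(theta, direction):
    """Orthogonal projection of theta onto the line spanned by `direction`."""
    norm_sq = sum(d * d for d in direction)
    coeff = sum(t * d for t, d in zip(theta, direction)) / norm_sq
    return [coeff * d for d in direction]


def projected_ascent(theta, grad_fn, direction, lr=0.1, steps=50):
    """Take gradient steps on S, projecting back onto the manifold after each one."""
    for _ in range(steps):
        grad = grad_fn(theta)
        theta = [t + lr * g for t, g in zip(theta, grad)]
        theta = project_onto_line(theta, direction)
    return theta


# Toy surrogate S(theta) = theta[0] + 2 * theta[1]. By assumption, only
# theta[0] is causally linked to Y*, so the manifold is the theta[0] axis.
def surrogate_grad(theta):
    return [1.0, 2.0]


final = projected_ascent([0.0, 0.0], surrogate_grad, direction=[1.0, 0.0])
print(final)  # theta[1] stays at 0.0 despite having the steeper surrogate gradient
```

Unconstrained ascent would move mostly along `theta[1]`, the arbitrage direction. The projection discards that component at every step.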

This is structural engineering, not supervision. You're not evaluating every output. You're ensuring the optimization topology remains well-behaved.

Dynamic Governance

The manifold is not static. As user preferences drift, the causal structure rotates. CIMO's Continuous Calibration Check (CCC) tracks this rotation:

- Detect when calibration f(S) = 𝔼[Y|S] begins to drift

- Re-estimate the manifold geometry using fresh counterfactual data

- Update SDP verification load to match the new alignment gap
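A drift check of this kind might be sketched as follows. The binned estimator, the function names, and the `tolerance` threshold are assumptions chosen for illustration, not the CCC procedure itself. The sketch assumes S is scaled to [0, 1] and estimates f(S) = 𝔼[Y|S] as the mean of Y within equal-width bins of S.

```python
from collections import defaultdict


def fit_calibration(pairs, n_bins=5):
    """Estimate f(S) = E[Y|S] as the mean of Y within each equal-width S bin."""
    sums, counts = defaultdict(float), defaultdict(int)
    for s, y in pairs:
        b = min(int(s * n_bins), n_bins - 1)  # clamp s == 1.0 into the last bin
        sums[b] += y
        counts[b] += 1
    return {b: sums[b] / counts[b] for b in counts}


def calibration_drift(reference, fresh):
    """Largest change in estimated E[Y|S] over bins present in both fits."""
    shared = reference.keys() & fresh.keys()
    if not shared:
        raise ValueError("no overlapping S bins to compare")
    return max(abs(reference[b] - fresh[b]) for b in shared)


def needs_recalibration(reference, fresh, tolerance=0.1):
    return calibration_drift(reference, fresh) > tolerance


# Hypothetical (S, Y) pairs: high scores stop translating into high outcomes.
old = fit_calibration([(0.1, 0.1), (0.5, 0.5), (0.9, 0.9)])
new = fit_calibration([(0.1, 0.1), (0.5, 0.5), (0.9, 0.5)])
print(needs_recalibration(old, new))  # True
```

A production check would need enough samples per bin and some allowance for sampling noise. The fixed tolerance here stands in for that.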

Result: Instead of catastrophic regime shifts (model suddenly decouples), you get smooth tracking. The system maintains stability by continuously updating its structural constraints.

IV. Conclusion

From Safety to Structure

The alignment problem is not primarily about "making AI safe." It's about making optimization geometrically sound. When you optimize against a proxy without maintaining causal structure, decoupling is not a risk. It's an inevitable consequence of misaligned incentives.

CIMO doesn't prevent this through oversight or control. It prevents it through structural engineering: design the optimization topology such that the manifold is an attractor, not a constraint you fight against.

From Probability to Causality

Standard ML treats alignment as a prediction problem: learn P(Y|X), optimize for high Ŷ. But correlation is not causation. When you optimize, the joint distribution P(S, Y*) shifts, and your predictions become invalid.
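One simple way to see predictions degrade under optimization is a selection simulation. This is a sketch of regressional Goodhart under assumed Gaussian noise, not a model of any particular system: S is taken to be Y plus independent noise, and "optimizing" means keeping only the top fraction of candidates ranked by S.

```python
import random


def selection_gap(top_fraction, n=10000, seed=0):
    """Mean S minus mean Y among the candidates selected for highest S."""
    rng = random.Random(seed)
    population = []
    for _ in range(n):
        y = rng.gauss(0.0, 1.0)      # true value
        s = y + rng.gauss(0.0, 1.0)  # proxy = true value + independent noise
        population.append((s, y))
    population.sort(reverse=True)    # rank by S, highest first
    k = max(1, int(n * top_fraction))
    selected = population[:k]
    mean_s = sum(s for s, _ in selected) / k
    mean_y = sum(y for _, y in selected) / k
    return mean_s - mean_y


mild = selection_gap(top_fraction=0.5)
strong = selection_gap(top_fraction=0.01)
print(mild, strong)  # the gap widens as selection pressure increases
```

Across the whole population S is an unbiased proxy for Y, yet the harder the selection on S, the more S overstates Y among what is selected.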

CIMO treats alignment as a causal problem: identify the structural equations that generate Y*, ensure your policy preserves them. This requires counterfactual reasoning, off-policy evaluation, and continuous validation: exactly the toolkit CIMO provides.

The Inevitability of Structure

When systems optimize against proxies, structural divergence is not a possibility. It's a certainty. You can observe this law across economics, biology, cybernetics, and epistemology.

CIMO does not fight this law. It uses it, imposing verification load calibrated to the alignment gap, maintaining causal structure through geometric constraints, and tracking manifold drift through continuous validation.

The question is not whether to impose structure. The question is whether you engineer it deliberately, or let it emerge chaotically.